Optimizing Latency for Real-Time AI: A Practical Guide to Edge Hosting in the UK & EU

In the race to deploy sophisticated Artificial Intelligence (AI), speed is the new currency. For many modern applications, the intelligence of a model is only as valuable as the speed at which it can deliver an answer. Whether it’s a self-driving car identifying a pedestrian or a high-frequency trading algorithm executing a trade, milliseconds matter.

This necessity has exposed a critical bottleneck in traditional centralized cloud infrastructure. While centralized data centers are powerful, they are often physically distant from end-users. For a user in London interacting with a server in Virginia, the speed of light imposes a hard limit on performance. This latency is unacceptable for real-time AI.

The solution lies in moving the compute power closer to the source of the data: the edge. By leveraging edge hosting, businesses in the UK and EU can drastically reduce latency, ensure compliance with strict data regulations, and unlock the full potential of real-time AI.

In this guide, we will explore the architecture, benefits, and optimization strategies for deploying real-time AI at the edge, specifically tailored for the UK and European markets. You will learn how to build infrastructure that is not only faster but also more secure and compliant.

What Is Edge Hosting and Edge Computing?

To understand how to optimize for speed, we must first define the infrastructure. Edge computing refers to a distributed computing framework that brings enterprise applications closer to data sources such as IoT devices or local edge servers.

Edge hosting, therefore, is the deployment of servers and services in data centers that are geographically closer to end-users compared to the massive “hyperscale” regions operated by major cloud providers.

The Difference Between Edge and Cloud

The core difference lies in location and purpose. Centralized cloud computing consolidates processing power in a few massive data centers. It excels at batch processing and storage but struggles to meet the latency targets of real-time interactions.

Edge hosting distributes that power across many smaller locations. If you are serving users in Manchester, Paris, and Berlin, edge hosting allows you to place compute resources in or near those specific cities rather than routing all traffic to a single data center in Dublin. This proximity is the fundamental mechanism for reducing latency.
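
To make the proximity argument concrete, here is a rough back-of-the-envelope sketch. It assumes light travels through optical fiber at about 200 km per millisecond (roughly two-thirds of its speed in a vacuum) and uses approximate city-center coordinates. The result is only a physical lower bound: real routes are longer than the great-circle path and add router and queuing delay on top.

```python
import math

# Assumption: signal speed in optical fiber is about 200,000 km/s,
# i.e. roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0

# Approximate city-center coordinates (latitude, longitude) in degrees.
CITIES = {
    "Dublin": (53.3498, -6.2603),
    "Manchester": (53.4808, -2.2426),
    "Paris": (48.8566, 2.3522),
    "Berlin": (52.5200, 13.4050),
}

def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))

def min_round_trip_ms(a, b):
    """Best-case round trip over a perfectly straight fiber path."""
    return 2 * great_circle_km(a, b) / FIBER_KM_PER_MS

for city in ("Manchester", "Paris", "Berlin"):
    rtt = min_round_trip_ms(CITIES[city], CITIES["Dublin"])
    print(f"{city} -> Dublin: at least {rtt:.1f} ms round trip")
```

Even in this idealized model, the floor rises with distance, which is why placing a node in or near each city matters more than tuning a single distant data center.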

Why Real-Time AI Requires Ultra-Low Latency

Real-time AI latency is the time elapsed between a user’s input and the model’s output. In high-stakes environments, high latency breaks the illusion of intelligence and utility.

Tight Decision Loops

Consider autonomous systems or robotics. These systems operate on tight decision loops where the output of one process immediately feeds into the input of the next. If the inference step takes too long due to network lag, the system fails to react in time. Low-latency AI applications require near-instantaneous processing to function safely and effectively.

User Experience (UX)

For consumer-facing applications like voice assistants or real-time translation, delays of even a few hundred milliseconds can feel unnatural. As a rule of thumb, users perceive responses within about 100 ms as instantaneous, while delays over 300 ms feel sluggish. Edge hosting helps keep response times within that “instant” perception window.

Data Freshness

In financial markets or dynamic pricing engines, the value of data decays rapidly. AI models need to make inferences based on the absolute latest data. Edge infrastructure ensures that the data ingestion and processing pipeline is as short as possible.

Latency Sources in AI Infrastructure

Optimizing for speed requires identifying where time is lost. There are three primary culprits in AI infrastructure:

- Network Hops: Every time data passes through a router or switch, latency is introduced. Sending data across the Atlantic adds thousands of kilometers of cable and often a dozen or more hops. Edge hosting minimizes this by physically shortening the distance and reducing the number of network devices the data must traverse. Network latency optimization focuses on finding the most direct path.

- Storage I/O: Reading the model from a disk and loading it into memory takes time. Slow storage can bottleneck even the fastest GPUs.

- Model Loading and Inference: The time it takes for the hardware to actually process the data. This AI inference latency is dependent on compute power and software optimization.
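
Before optimizing any of these three sources, it helps to measure how much each contributes. The sketch below is one minimal way to do that with a timing context manager. The stage names and the `time.sleep` calls are placeholders standing in for a real network call, a real disk read, and a real forward pass.

```python
import time
from contextlib import contextmanager

timings_ms = {}

@contextmanager
def timed(stage):
    """Record the wall-clock duration of a pipeline stage in milliseconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings_ms[stage] = (time.perf_counter() - start) * 1000.0

# Placeholder stages: time.sleep stands in for real work.
with timed("network"):
    time.sleep(0.020)   # pretend network round trip
with timed("storage_io"):
    time.sleep(0.005)   # pretend model or weights read
with timed("inference"):
    time.sleep(0.010)   # pretend forward pass

# Print the stages from slowest to fastest with their share of the total.
total = sum(timings_ms.values())
for stage, ms in sorted(timings_ms.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{stage:<12}{ms:7.1f} ms  ({ms / total:5.1%} of total)")
```

Sorting by duration puts the largest contributor first, which is usually where optimization effort pays off most.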

Benefits of Edge Hosting in the UK and EU

Deploying edge infrastructure specifically within the UK and Europe offers distinct advantages beyond just speed.

Proximity to Users

Europe is densely populated with high internet penetration. Edge hosting in the UK and mainland Europe allows you to place workloads within milliseconds of millions of users. A well-connected server in London can typically reach most of the UK population in under 10ms, which is physically impossible for a server located in the US.

Data Sovereignty and Governance

Data sovereignty is a critical concern. Keeping data within national borders is often a legal requirement, not just a preference. Edge nodes allow you to process data locally without it ever leaving the country of origin, simplifying compliance with national laws.

Regulatory Compliance

The EU has some of the strictest digital privacy regulations in the world. Using local edge providers helps ensure that your infrastructure aligns with these frameworks, reducing the legal risks associated with international data transfers.

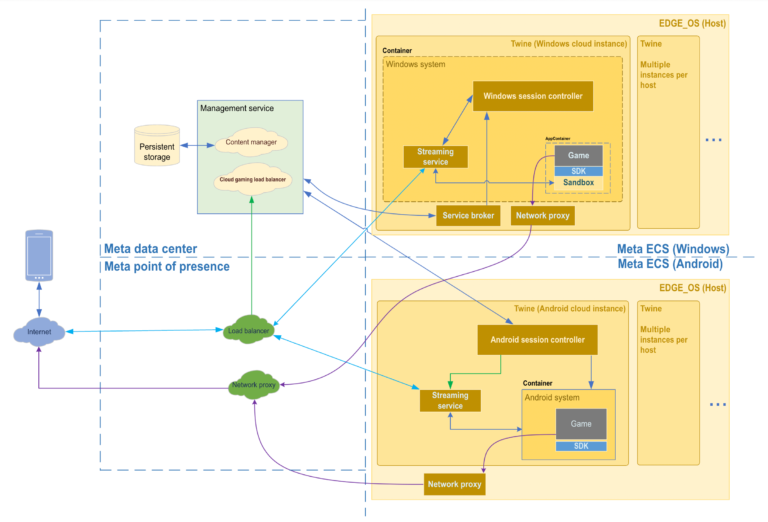

Reference Architecture for Real-Time AI at the Edge

Building a low latency hosting architecture requires a tiered approach. It is rarely “all edge” or “all cloud,” but rather a hybrid model.

1. Edge Nodes (Inference)

These are your frontline servers located in regional data centers (e.g., London, Frankfurt, Amsterdam). They host the trained AI models and handle real-time inference requests. They are optimized for speed and high throughput.

2. Regional Aggregation

Slightly further back, you might have regional hubs that aggregate data from multiple edge nodes. These can handle tasks that require more compute power but are less time-sensitive, such as retraining lightweight models or aggregating logs.

3. Core Cloud (Training)

The centralized cloud is still the best place for heavy lifting. This is where you store historical data and train your massive foundation models. Once a model is trained, it is pushed out to the edge AI architecture for deployment.

Hardware and Network Optimization Strategies

Software can only run as fast as the hardware allows. To get high performance from edge servers, you must carefully select your components.

GPU vs. CPU for Inference

For simple decision trees, high-frequency CPUs may suffice. However, for deep learning and generative AI, GPUs are non-negotiable. Edge servers equipped with specialized inference GPUs (like NVIDIA T4s or A10s) offer the parallelism needed to process complex inputs quickly.

NVMe Storage

SATA SSDs can be a bottleneck when loading large models into memory. Low-latency networking must be paired with NVMe storage, which connects directly to the PCIe bus, drastically reducing I/O latency.
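
A rough calculation shows why storage matters for model loading. The figures here are assumptions for illustration, not benchmarks: a 14 GB model file, about 550 MB/s sequential read for a SATA SSD, and about 3,500 MB/s for a PCIe 3.0 x4 NVMe drive.

```python
def load_seconds(model_gb, throughput_mb_s):
    """Idealized time to read a model file sequentially from disk."""
    return model_gb * 1000 / throughput_mb_s

MODEL_GB = 14  # assumed model size, for illustration only

# Assumed sequential read throughput in MB/s for each drive type.
for name, mb_s in (("SATA SSD", 550), ("NVMe (PCIe 3.0 x4)", 3500)):
    print(f"{name:<20}{load_seconds(MODEL_GB, mb_s):6.1f} s to load")
```

This mainly affects cold starts and model swaps rather than each individual request, since a model that stays resident in memory does not need to be re-read.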

Direct Peering

Internet routing is unpredictable. To guarantee speed, look for edge providers that offer direct peering with major ISPs and internet exchanges (like LINX in London or DE-CIX in Frankfurt). This ensures your data takes the shortest possible path to the end-user, bypassing congested public internet routes.

Software Optimization for Edge AI Workloads

Once the hardware is set, you must tune the software to optimize AI inference latency.

Model Quantization

Most models are trained using 32-bit floating-point numbers. However, inference often doesn’t need that level of precision. Quantization converts these to 8-bit integers, shrinking the model size and significantly speeding up execution with minimal loss in accuracy. This is crucial for edge AI optimization.
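
As a minimal illustration of the idea, the sketch below applies symmetric 8-bit quantization to a short list of weights using a single shared scale. Production toolchains use more refined schemes (per-channel scales, calibration data), so treat this as a demonstration of the principle only. The weight values are made up.

```python
def quantize_int8(weights):
    """Map floats to int8 values using one shared scale (symmetric scheme)."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from the int8 representation."""
    return [v * scale for v in q]

weights = [0.82, -1.27, 0.003, 0.5, -0.64]   # made-up example weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print("scale:", scale)
print("largest round-trip error:", max_error)
```

Each weight now fits in one byte instead of four, and the round-trip error is bounded by half the scale, which is the "minimal loss in accuracy" trade-off described above.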

Caching Strategies

Not every request needs a fresh inference. Implementing intelligent caching at the edge allows you to serve pre-computed answers for common queries instantly.

Container Optimization

Using containers (built with Docker and orchestrated with Kubernetes, for example) is standard, but stock images are often bloated. Specialized edge operating systems and stripped-down container runtimes can shave vital milliseconds off startup times (cold starts) and improve resource utilization.

Scaling Edge Infrastructure Across Regions

As your user base grows, so must your footprint. Scaling edge computing presents unique challenges compared to scaling a centralized cloud.

Orchestration

Managing ten servers in one data center is easy; managing one server in ten different data centers is hard. You need a centralized orchestration plane (often Kubernetes-based) that can deploy updates to all edge nodes simultaneously.
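
The core of such a control plane can be sketched as a fan-out that pushes one version to every node in parallel and collects per-node results. Everything named here is hypothetical: the node list, the `deploy_to_node` function, and the simulated failure exist only to show the shape of the logic. A real system would call its orchestrator's API instead.

```python
from concurrent.futures import ThreadPoolExecutor

EDGE_NODES = ["london", "manchester", "paris", "frankfurt", "amsterdam"]

def deploy_to_node(node, version):
    """Hypothetical deploy step; simulates one failing node for the demo."""
    if node == "paris" and version == "bad":
        raise RuntimeError("health check failed")
    return f"{node} now running {version}"

def rollout(nodes, version):
    """Deploy to every node in parallel and report successes and failures."""
    succeeded, failed = {}, {}
    with ThreadPoolExecutor(max_workers=max(1, len(nodes))) as pool:
        futures = {n: pool.submit(deploy_to_node, n, version) for n in nodes}
        for node, future in futures.items():
            try:
                succeeded[node] = future.result()
            except Exception as exc:
                failed[node] = str(exc)   # keep going; report at the end
    return succeeded, failed

ok, bad = rollout(EDGE_NODES, "v2")
print(f"{len(ok)} nodes updated, {len(bad)} failed")
```

Collecting failures per node, rather than stopping at the first error, makes it possible to retry or roll back only the nodes that need it.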

Traffic Routing and Geo-DNS

How does a user in Manchester get routed to the Manchester node and not the London node? Geo-DNS services answer DNS queries with the IP address of the server closest to the user, usually inferred from the location of the user’s DNS resolver or client subnet.

Failover Strategies

Multi-region edge hosting provides resilience. If the Paris node goes offline, traffic must automatically reroute to the next closest node (perhaps Brussels or Frankfurt) without disrupting the service.
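
The routing and failover behavior described in this section boils down to one selection rule: send each user to the lowest-latency node that is currently healthy. The sketch below shows that rule in isolation. The latency table and region names are invented for illustration, and a real Geo-DNS or load-balancing service would feed it from live measurements and health checks.

```python
# Hypothetical measured round-trip times (ms) from a user region to each node.
LATENCY_MS = {
    "paris-user": {"paris": 3, "brussels": 9, "frankfurt": 12, "london": 11},
    "manchester-user": {"manchester": 2, "london": 7, "paris": 14},
}

def pick_node(user_region, healthy_nodes):
    """Return the lowest-latency healthy node for a region, or None."""
    candidates = {
        node: ms
        for node, ms in LATENCY_MS[user_region].items()
        if node in healthy_nodes
    }
    if not candidates:
        return None
    return min(candidates, key=candidates.get)

all_nodes = {"paris", "brussels", "frankfurt", "london", "manchester"}
print(pick_node("paris-user", all_nodes))               # normal operation
print(pick_node("paris-user", all_nodes - {"paris"}))   # Paris node offline
```

With the Paris node removed from the healthy set, the same call falls back to the next closest node without any change on the client side.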

Security, Privacy, and Compliance in the EU

In Europe, performance cannot come at the expense of privacy. GDPR compliant edge hosting is a legal necessity.

GDPR and Data Minimization

Edge computing supports GDPR principles by enabling data minimization. You can process raw data (like video feeds) at the edge, extract only the necessary metadata (e.g., “count: 5 people”), and discard the personally identifiable raw data immediately. This significantly reduces your compliance burden.
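
In code, the pattern looks roughly like the sketch below: the raw frame is passed into a function, only an aggregate summary comes out, and nothing in the returned record contains the frame itself. `detect_people` is a hypothetical toy detector (it counts `P` bytes) standing in for a real vision model, and the field names are assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class FrameSummary:
    camera_id: str
    captured_at: str
    people_count: int

def detect_people(frame_bytes):
    """Hypothetical detector; a real one would run a vision model."""
    return frame_bytes.count(b"P")   # toy rule: each 'P' byte is one person

def summarize_frame(camera_id, frame_bytes):
    """Derive aggregate metadata; the raw frame is never stored or returned."""
    count = detect_people(frame_bytes)
    return FrameSummary(
        camera_id=camera_id,
        captured_at=datetime.now(timezone.utc).isoformat(timespec="seconds"),
        people_count=count,
    )

summary = summarize_frame("cam-01", b"..P..P.P.PP")
print(summary)
```

Only the `FrameSummary` record would be forwarded to the core. Whether a given summary still counts as personal data depends on context, so the fields sent upstream deserve the same review as the raw feed.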

Data Residency

By utilizing secure edge computing nodes within specific borders, you ensure that sensitive citizen data never crosses international lines, satisfying data residency laws in countries like Germany and France.

Encryption

Data must be encrypted both in transit and at rest. Edge nodes should use hardware security modules (HSMs) or Trusted Platform Modules (TPMs) to manage encryption keys securely, so that physical access to the server does not grant access to the data.

Cost Considerations and ROI of Edge Hosting

While edge hosting cost structures differ from the cloud, the Return on Investment (ROI) for AI workloads is often compelling.

Bandwidth Savings

Sending terabytes of raw video or sensor data to a central cloud is expensive. By processing data at the edge, you only send the insights back to the core. This can reduce bandwidth usage by orders of magnitude and cut cloud egress fees accordingly.
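
A quick calculation shows the scale of the difference. The inputs are assumptions chosen for illustration: one camera streaming at 4 Mbit/s around the clock, versus the same camera sending about 200 bytes of metadata per second after local processing.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600

def monthly_gb(bytes_per_second):
    """Data volume over a 30-day month, in decimal gigabytes."""
    return bytes_per_second * SECONDS_PER_MONTH / 1e9

raw_video = monthly_gb(4_000_000 / 8)   # assumed 4 Mbit/s video stream
metadata = monthly_gb(200)              # assumed 200 B/s of extracted insights

print(f"raw video: {raw_video:8.1f} GB per camera per month")
print(f"metadata:  {metadata:8.4f} GB per camera per month")
print(f"reduction: {raw_video / metadata:,.0f}x")
```

Multiply the per-camera figures by the size of the fleet and by your provider's egress rate to estimate the actual saving for a given deployment.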

Hardware Amortization

Unlike the on-demand, pay-as-you-go model of the cloud, many edge providers offer bare-metal servers, typically at a fixed recurring price. For steady-state workloads, renting bare metal is often significantly cheaper per compute cycle than on-demand virtual instances.

Real-World Use Cases for Edge AI in Europe

Real-time AI applications are already transforming industries across the continent.

Smart Cities

Cities like Amsterdam and Barcelona use edge AI to manage traffic flow. Cameras process video feeds locally to adjust traffic lights in real-time, reducing congestion and emissions without sending sensitive video data to a central cloud.

Fintech in London

High-frequency trading platforms in London utilize edge servers located physically close to the London Stock Exchange. The reduced latency allows for faster execution of trades, providing a competitive edge where microseconds equate to millions in revenue.

Manufacturing in Germany

German automotive factories use computer vision at the edge to inspect parts on the assembly line. Defects are spotted instantly, and the machinery is adjusted in real-time, reducing waste and ensuring quality control.

How to Choose an Edge Hosting Provider in the UK & EU

Selecting the right partner is critical. When evaluating an edge hosting provider in the UK or an edge cloud in Europe, consider the following:

- Location Coverage: Do they have data centers in the specific cities where your users are? A provider with only one location in Dublin is not a true edge provider for a user in Munich.

- Network Quality: Ask about their peering arrangements. Do they connect directly to local ISPs?

- Compliance Certifications: Ensure they have ISO 27001 certifications and fully understand GDPR requirements.

- Hardware Flexibility: Can you request specific GPUs or storage configurations?

FAQ – Edge Hosting for Real-Time AI

Q1: What latency can edge hosting achieve?

Edge hosting can typically achieve single-digit millisecond latency (1-5ms) for users within the same metropolitan area. This is a significant improvement over the 20-50ms+ often seen with centralized cloud architectures.

Q2: Is edge hosting more expensive than cloud?

It depends on the workload. While initial setup complexity is higher, edge hosting often reduces bandwidth costs significantly. For high-throughput AI workloads, bare-metal edge servers can be more cost-effective than comparable cloud instances.

Q3: Does edge hosting improve AI inference speed?

Indirectly, yes. While the inference time itself depends on the hardware (GPU), edge hosting removes the network latency bottleneck. This means the total time from user request to response is drastically reduced.

Q4: How does edge hosting support GDPR compliance?

It allows you to keep data within the EU or within specific countries. It also enables you to process and anonymize sensitive data locally, so raw personal data never needs to be transmitted or stored centrally.

Q5: Which AI workloads benefit most from edge computing?

Workloads that require real-time decision-making, such as autonomous vehicles, industrial robotics, video analytics, augmented reality (AR), and real-time voice processing.

Q6: How many edge locations are needed in Europe?

This depends on your user distribution. To cover the major economic hubs effectively, a deployment across 3-5 key cities (e.g., London, Frankfurt, Paris, Amsterdam, Stockholm) usually provides excellent coverage for the majority of the population.

Improving Your Infrastructure

The shift to the edge is not just a trend; it is the necessary evolution of infrastructure to support the next generation of AI. For technical leaders in the UK and EU, optimizing latency is no longer optional—it is a competitive requirement.

By understanding the architecture, selecting the right locations, and optimizing both hardware and software, you can build a foundation that delivers true real-time intelligence.

Ready to reduce your latency and take control of your AI infrastructure? Evaluate your current deployment today and discover how edge hosting can transform your application’s performance.