Scaling AI Agents in 2026: A Practical Blueprint for CTOs and Engineering Leaders

We have moved past the era where AI agents were mere novelties or experimental chat interfaces. By 2026, AI agents—autonomous software capable of reasoning, planning, and executing complex workflows—will have transitioned from R&D sandboxes to critical enterprise infrastructure. For CTOs and engineering leaders, this shift presents a new set of architectural and operational challenges.

It is no longer enough to build a prototype that works in a controlled environment. The real test lies in deploying thousands of concurrent agents that can reliably interact with internal APIs, external services, and human operators without breaking the bank or compromising security.

This blueprint addresses the specific hurdles of scaling AI systems. It moves beyond the basics of prompt engineering to focus on the hard engineering problems: latency management, cost control, robust orchestration, and observability. If your organization is preparing to move from pilot programs to production-scale multi-agent systems, this guide provides the architectural roadmap you need.

What Are AI Agents and Multi-Agent Systems?

To architect a scalable system, we must first define the workload. Put simply, AI agents are software entities powered by large language models (LLMs) that can perceive their environment, reason about how to achieve a goal, and take actions to fulfill that goal.

Unlike traditional automation scripts that follow a linear if-this-then-that logic, AI agents can handle ambiguity. They can retry failed steps, break down complex instructions into sub-tasks, and access tools like databases or APIs dynamically.

Multi-agent systems take this a step further. In these environments, multiple specialized agents collaborate to solve problems. For example, in a software development workflow, one agent might write code, another might write tests, and a third might review the security implications. These agents pass messages and context between each other, creating a complex web of interactions that mimics a human team.

Understanding this distinction is critical because scaling a single chat interface is fundamentally different from scaling a mesh of autonomous agents that need to maintain state, share context, and coordinate actions asynchronously.

Why Scaling AI Agents Is Hard

Moving from a single demo to enterprise-grade production introduces friction points that traditional microservices architectures aren’t always equipped to handle.

Latency Bottlenecks

LLMs are computationally expensive and slow compared to traditional database queries. When you chain multiple agents together, latency compounds. If Agent A waits 3 seconds for an inference and then hands its result to Agent B, which waits another 4 seconds, the user has already waited 7 seconds before anything comes back. Scaling AI systems requires aggressive optimization of inference times and clever caching strategies.
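The arithmetic behind compounding latency can be sketched in a few lines. This is an illustrative model only: the function names are hypothetical, and the cache hit rate and cache lookup time in the last call are assumed numbers, not measurements.

```python
def sequential_latency(step_seconds):
    """Wall-clock time when each agent waits for the previous one."""
    return sum(step_seconds)


def parallel_latency(step_seconds):
    """Wall-clock time when independent steps can run concurrently."""
    return max(step_seconds, default=0.0)


def expected_latency(miss_seconds, hit_seconds, hit_rate):
    """Average latency when a fraction of requests is served from cache."""
    return hit_rate * hit_seconds + (1.0 - hit_rate) * miss_seconds


chain = [3.0, 4.0]  # Agent A then Agent B, as in the example above
print(sequential_latency(chain))        # 7.0
print(parallel_latency(chain))          # 4.0
print(expected_latency(7.0, 0.5, 0.5))  # 3.75 (assumed 50% hit rate, 0.5 s cache lookup)
```

The parallel figure only applies when Agent B does not depend on Agent A's output, which is why caching tends to matter more for strictly chained workflows.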

Cost Explosion

Token costs accumulate rapidly. An agent stuck in a reasoning loop, or a multi-agent system that chatters excessively between nodes, can burn through budget in minutes. The hard part is predicting and capping these variable costs without stifling the agents’ ability to perform.
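One common guardrail is a hard budget per agent run. The sketch below is a minimal illustration, not a production implementation: `TokenBudget`, `BudgetExceeded`, and `run_agent` are hypothetical names, and `step_fn` stands in for whatever performs one reasoning step and reports the tokens it spent.

```python
class BudgetExceeded(RuntimeError):
    """Raised when an agent run goes past its token or step limit."""


class TokenBudget:
    def __init__(self, max_tokens, max_steps):
        self.max_tokens = max_tokens
        self.max_steps = max_steps
        self.tokens_used = 0
        self.steps = 0

    def charge(self, tokens):
        """Record one step and stop the run if a limit is exceeded."""
        self.tokens_used += tokens
        self.steps += 1
        if self.tokens_used > self.max_tokens:
            raise BudgetExceeded(f"token limit {self.max_tokens} exceeded")
        if self.steps > self.max_steps:
            raise BudgetExceeded(f"step limit {self.max_steps} exceeded")


def run_agent(step_fn, budget):
    """Call step_fn until it reports completion or the budget trips.

    step_fn is assumed to return a (done, tokens_spent) tuple.
    """
    while True:
        done, tokens = step_fn()
        budget.charge(tokens)
        if done:
            return budget.tokens_used
```

Capping both tokens and steps catches two different failure modes: a few very large calls, and many small ones that never converge.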

Orchestration Complexity

Managing state across long-running, non-deterministic workflows is difficult. If an agent fails halfway through a multi-step process, how do you recover? Do you restart from the beginning, or can you resume from the last known good state? Orchestration at scale requires sophisticated state management that goes beyond standard load balancing.
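Resuming from the last known good state can be illustrated with a simple checkpoint. This is a sketch under stated assumptions: the `checkpoint` dict stands in for durable storage (a database row or a workflow engine's history), and `run_workflow` is a hypothetical helper rather than any particular engine's API.

```python
def run_workflow(steps, checkpoint):
    """Run named steps in order, skipping any already recorded as completed.

    steps: list of (name, fn) pairs; each fn receives the accumulated state.
    checkpoint: a dict that the caller persists between attempts.
    """
    state = checkpoint.setdefault("state", {})
    completed = checkpoint.setdefault("completed", [])
    for name, fn in steps:
        if name in completed:
            continue  # finished in an earlier attempt, do not repeat it
        state[name] = fn(state)  # if this raises, the checkpoint is unchanged
        completed.append(name)
    return state
```

If the second of two steps fails, calling `run_workflow` again with the same checkpoint re-runs only that step. This assumes each step is safe to retry; steps with external side effects need idempotency keys or compensation logic on top.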

Data Governance

Agents need access to data to be useful, but giving them unfettered access is a security nightmare. Implementing granular, role-based access control (RBAC) for autonomous agents, so that each agent reaches only the data it needs for a specific task, adds a layer of complexity to your data governance strategy.
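A scoped permission check might look like the following. The `AgentIdentity` type, the `resource:action` scope format, and the agent and resource names are all assumptions made for illustration; a real deployment would delegate this to its identity provider or policy engine.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentity:
    name: str
    scopes: frozenset  # e.g. {"calendar:read", "calendar:write"}


def authorize(agent, resource, action):
    """Allow the call only if the agent holds the exact scope it needs."""
    required = f"{resource}:{action}"
    if required not in agent.scopes:
        raise PermissionError(f"{agent.name} lacks scope {required}")
    return True


scheduler = AgentIdentity("meeting-scheduler", frozenset({"calendar:read", "calendar:write"}))
authorize(scheduler, "calendar", "write")  # allowed
# authorize(scheduler, "payroll", "read") would raise PermissionError
```

Denying by default keeps a newly added tool inaccessible until someone grants a scope for it explicitly.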

Core Architecture for Scalable AI Agents

To handle these challenges, your architecture needs to be modular and resilient. A monolithic approach will likely fail under the weight of concurrent agent sessions.

Microservices Design

Adopting a microservices approach allows you to decouple the agent logic from the underlying model serving infrastructure. You might have a “Planning Service” that handles the high-level reasoning, and separate “Tool Services” that agents call to execute tasks. This separation allows you to scale the compute-heavy inference layer independently of the lightweight tool execution layer.

Event-Driven Workflows

Synchronous HTTP requests are often ill-suited for long-running agent tasks. Scalable AI architecture relies heavily on event-driven patterns. Using message queues (like Kafka or RabbitMQ) allows agents to publish events (e.g., “Task Analyzed”) and subscribe to others (e.g., “Code Generated”). This asynchronous model improves resilience; if a service goes down, the message persists until it can be processed.
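The publish/subscribe pattern can be shown with an in-memory stand-in for a broker. This sketch is not Kafka or RabbitMQ client code: `EventBus` is a hypothetical class that only demonstrates how agents decouple through topics and how events wait in a queue until they are processed.

```python
from collections import defaultdict, deque


class EventBus:
    """Toy in-process broker: events queue up until drain() delivers them."""

    def __init__(self):
        self._subscribers = defaultdict(list)
        self._pending = deque()

    def subscribe(self, topic, handler):
        self._subscribers[topic].append(handler)

    def publish(self, topic, payload):
        # Publishing never calls a handler directly, so producers do not block.
        self._pending.append((topic, payload))

    def drain(self):
        """Deliver queued events in order; handlers may publish follow-ups."""
        processed = 0
        while self._pending:
            topic, payload = self._pending.popleft()
            for handler in self._subscribers[topic]:
                handler(payload)
            processed += 1
        return processed


bus = EventBus()
results = []
# A coding agent reacts to analysis events and emits its own event.
bus.subscribe("TaskAnalyzed", lambda task: bus.publish("CodeGenerated", f"code for {task}"))
bus.subscribe("CodeGenerated", results.append)
bus.publish("TaskAnalyzed", "ticket-42")
bus.drain()
print(results)  # ['code for ticket-42']
```

A real broker adds the persistence and redelivery that make the pattern resilient; the in-memory queue here loses its events if the process dies.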

Model Serving Layers

Directly hitting OpenAI or Anthropic APIs works for prototypes, but for scale, you need a robust gateway or model serving layer. This layer handles rate limiting, failover (switching providers if one goes down), and semantic caching (returning cached answers for similar queries to save costs). AI architecture design must treat model inference as a commodity resource that can be swapped or routed dynamically.
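A gateway with failover and caching could be sketched as below. Several things here are assumptions: `ModelGateway` is a hypothetical class, each provider is represented by a plain callable rather than a real SDK client, and the cache is a normalized exact-match lookup standing in for true semantic caching, which would compare embeddings instead.

```python
class AllProvidersFailed(RuntimeError):
    """Raised when every configured provider returned an error."""


class ModelGateway:
    def __init__(self, providers):
        # providers: ordered list of (name, callable taking a prompt string)
        self.providers = providers
        self.cache = {}

    def complete(self, prompt):
        # Normalize case and whitespace so trivially different prompts share a key.
        key = " ".join(prompt.lower().split())
        if key in self.cache:
            return self.cache[key]
        errors = []
        for name, call in self.providers:
            try:
                answer = call(prompt)
            except Exception as exc:  # any provider error triggers failover
                errors.append(f"{name}: {exc}")
                continue
            self.cache[key] = answer
            return answer
        raise AllProvidersFailed("; ".join(errors))
```

Because callers depend only on `complete`, swapping or reordering providers is a configuration change rather than a code change.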

Infrastructure Choices: Cloud, Bare Metal, Hybrid

The underlying hardware you choose defines your performance ceiling and your cost floor.

GPU vs. CPU Workloads: While inference demands GPUs, much of the “glue” code—vector database lookups, API calls, prompt formatting—runs efficiently on CPUs. A smart architecture separates these workloads to maximize resource utilization.

Cost Predictability: Public cloud GPU instances are convenient but expensive at scale. For steady-state workloads, AI infrastructure scalability often favors reserved instances or even bare-metal GPU clusters to lock in lower costs.

Network Performance: In a multi-agent system, the volume of internal traffic (agents talking to agents) can be massive. Low-latency networking within your cluster is non-negotiable.

Orchestration and Automation at Scale

How do you manage thousands of agents spinning up and down?

Kubernetes for AI Workloads

Kubernetes has become the de facto standard for managing containerized applications, and it serves AI workloads well. Its ability to auto-scale pods based on custom metrics (like queue depth or GPU utilization) ensures that you have enough capacity during peak hours without paying for idle time at night.

Auto-Scaling Strategies

CPU-based auto-scaling is a poor signal for AI workloads, because an agent pod that is waiting on a GPU or a remote model API can be overloaded while its CPU stays nearly idle. You need to scale based on “inference pressure” or “token throughput.” Advanced AI orchestration platforms integrate with Kubernetes to scale the number of agent replicas dynamically based on the complexity of current tasks, not just CPU usage.
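The scaling decision itself is simple arithmetic once a workload metric is chosen. The sketch below assumes queue depth as the metric and a target number of queued tasks per replica; the function name, defaults, and limits are illustrative, and in practice an autoscaler would apply this logic for you.

```python
import math


def desired_replicas(queue_depth, target_per_replica, min_replicas=1, max_replicas=50):
    """Replica count needed to keep each replica near its target backlog."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(queue_depth / target_per_replica)
    # Clamp so the fleet neither scales to zero nor grows without bound.
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(95, 10))      # 10
print(desired_replicas(0, 10))       # 1
print(desired_replicas(10_000, 10))  # 50
```

The upper clamp doubles as a cost guardrail: a runaway queue cannot scale the fleet past a known ceiling.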

Job Scheduling

Not every agent task needs to happen now. A robust job scheduler can defer lower-priority background tasks (like summarizing daily logs) to off-peak hours when compute is cheaper or more available.
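Deferring background work can be sketched with two priority queues. The off-peak window (22:00 to 06:00), the `JobScheduler` class, and the job names are assumptions for illustration only.

```python
import heapq
import itertools

OFF_PEAK_START, OFF_PEAK_END = 22, 6  # assumed off-peak window, in hours


def is_off_peak(hour):
    return hour >= OFF_PEAK_START or hour < OFF_PEAK_END


class JobScheduler:
    def __init__(self):
        self._urgent = []
        self._deferred = []
        self._seq = itertools.count()  # tie-breaker keeps submission order

    def submit(self, name, priority, deferrable=False):
        """Lower priority numbers run first within each queue."""
        queue = self._deferred if deferrable else self._urgent
        heapq.heappush(queue, (priority, next(self._seq), name))

    def next_job(self, hour):
        """Return the next job name to run at this hour, or None."""
        if self._urgent:
            return heapq.heappop(self._urgent)[2]
        if self._deferred and is_off_peak(hour):
            return heapq.heappop(self._deferred)[2]
        return None
```

With a deferrable `summarize_logs` job and an urgent `answer_customer` job queued, a call at 14:00 returns only the urgent job, and the summary waits until a call inside the off-peak window.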

Data Pipelines and Model Lifecycle Management

Data is the lifeblood of your agents, and the MLOps pipeline is what keeps it flowing cleanly.

Training and Fine-Tuning

Even if you primarily use off-the-shelf models, you will likely need to fine-tune smaller models for specific tasks to reduce latency and cost. Your architecture must support automated pipelines that ingest new data, retrain models, and evaluate them against benchmarks before deployment.

Versioning and Rollbacks

Model behavior changes. A prompt that worked yesterday might fail today if the underlying model drifts or if a new model version is released. Strict versioning of both models and prompts is essential. If a deployment causes a spike in agent hallucinations, your model lifecycle management system must allow for an instant rollback to the previous stable configuration.
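Versioning models and prompts together makes rollback a single operation. In this minimal sketch, `AgentConfig` and `ConfigRegistry` are hypothetical names, and the model and prompt identifiers are placeholders rather than real releases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentConfig:
    model: str           # placeholder model identifier
    prompt_version: str  # placeholder prompt identifier


class ConfigRegistry:
    """Keeps the deployment history so the previous config is always at hand."""

    def __init__(self, initial):
        self._history = [initial]

    @property
    def active(self):
        return self._history[-1]

    def deploy(self, config):
        self._history.append(config)

    def rollback(self):
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self.active


registry = ConfigRegistry(AgentConfig("model-a", "prompt-v1"))
registry.deploy(AgentConfig("model-b", "prompt-v2"))
registry.rollback()
print(registry.active)  # AgentConfig(model='model-a', prompt_version='prompt-v1')
```

Pinning the model and prompt in one immutable record avoids the case where a rollback restores the prompt but leaves a newer model in place.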

Observability, Monitoring, and Reliability

You cannot fix what you cannot see. Observability for AI systems is distinct from traditional APM (Application Performance Monitoring).

Latency Tracking: You need to trace the request not just through your microservices, but through the “thought process” of the agent. How long did it spend planning? How long waiting for the vector DB? How long generating the final token?

Cost Monitoring: Real-time visibility into token usage per tenant or per feature is vital. You should be able to see exactly which agent workflow is driving up your bill.

Failure Recovery: When an agent gets stuck in a loop or hallucinates, you need circuit breakers that detect this behavior and kill the process or redirect it to a human operator. AI monitoring tools must be tuned to detect semantic failures, not just system crashes.
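The failure-recovery idea above can be illustrated with a very small circuit breaker. It covers only one narrow kind of semantic failure, an agent repeating the same tool call with the same arguments; `LoopBreaker` and its threshold are assumptions, and detecting hallucinations would need separate checks.

```python
from collections import Counter


class LoopBreaker:
    """Flags an agent run that keeps issuing an identical tool call."""

    def __init__(self, max_repeats=3):
        self.max_repeats = max_repeats
        self._seen = Counter()

    def record(self, tool, arguments):
        """Record one tool call; return True when the run should be halted.

        arguments is assumed to be a dict with string keys.
        """
        key = (tool, repr(sorted(arguments.items())))
        self._seen[key] += 1
        return self._seen[key] >= self.max_repeats


breaker = LoopBreaker(max_repeats=3)
for _ in range(3):
    tripped = breaker.record("search_docs", {"query": "refund policy"})
print(tripped)  # True
```

When `record` returns True, the orchestrator can stop the run or hand it to a human operator instead of letting it keep spending tokens.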

Security, Privacy, and Compliance

As agents gain autonomy, the blast radius of a security breach increases.

Data Protection: Ensure that data fed into the context window is sanitized. PII (Personally Identifiable Information) redaction should happen before the data ever leaves your trust boundary, for example before it is sent to a third-party model API.

Access Control: Apply the Principle of Least Privilege. An agent designed to schedule meetings should not have read access to the payroll database. AI security best practices dictate that every agent operates with a unique identity and scoped permissions.

Regulatory Alignment: For industries like finance or healthcare, enterprise AI compliance requires audit trails. You must be able to reconstruct exactly why an agent made a specific decision, which means logging the inputs, the prompt, and the model’s reasoning trace.
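Redaction and audit logging can be combined at the point where a request is assembled. The sketch below is deliberately simplistic: the two regular expressions catch only email addresses and US-style Social Security numbers, the field names in the audit record are assumptions, and real PII detection needs far broader coverage.

```python
import json
import re

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text):
    """Replace the PII patterns this sketch knows about with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return SSN.sub("[SSN]", text)


def audit_record(agent_id, user_input, prompt, reasoning):
    """Build a JSON audit entry from already-redacted content."""
    return json.dumps(
        {
            "agent_id": agent_id,
            "input": redact(user_input),
            "prompt": redact(prompt),
            "reasoning": redact(reasoning),
        },
        sort_keys=True,
    )


print(redact("Contact jane@example.com, SSN 123-45-6789"))
# Contact [EMAIL], SSN [SSN]
```

Redacting before the record is written means the audit trail itself does not become a second copy of the sensitive data.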

Cost Optimization and Capacity Planning

AI infrastructure cost optimization is a continuous process.

GPU Utilization: Idle GPUs are budget killers. Techniques like GPU fractionalization (splitting one GPU across multiple smaller workloads) can drastically improve efficiency.

Spot vs. Reserved: Use spot instances for fault-tolerant batch processing tasks (like nightly data summarization) and reserved capacity for customer-facing, real-time agents.

Budget Forecasting: Move away from linear forecasting. AI costs scale with complexity, not just user count: a complex, multi-step query can cost an order of magnitude more than a simple one. Your forecasting models need to account for this variability to effectively reduce AI cloud costs.
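A forecast that tracks the complexity mix, rather than user count alone, can be sketched as follows. All figures are assumed for illustration: request volumes are invented, and the per-request costs of 1 cent and 10 cents simply encode a tenfold gap between tiers.

```python
def monthly_cost_cents(requests_by_tier, cents_per_request):
    """Total cost when each complexity tier has its own per-request price."""
    return sum(
        count * cents_per_request[tier]
        for tier, count in requests_by_tier.items()
    )


prices = {"simple": 1, "complex": 10}  # assumed tenfold gap, in cents

# Same 100,000 requests per month, different complexity mix.
baseline = monthly_cost_cents({"simple": 80_000, "complex": 20_000}, prices)
shifted = monthly_cost_cents({"simple": 60_000, "complex": 40_000}, prices)

print(baseline)  # 280000 cents, i.e. $2,800
print(shifted)   # 460000 cents, i.e. $4,600
```

With traffic held constant, moving 20 percent of requests into the complex tier raises the bill by roughly 64 percent, which a forecast based only on user count would miss.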

Scaling Team and Operating Model

Technology is only half the battle. You need the right people and processes.

Platform Engineering: Build a platform team dedicated to the “AI inner loop.” Their job is to provide the infrastructure (vector DBs, model gateways, monitoring) so that product teams can focus on building agent logic.

DevOps Maturity: Your AI engineering team structure should integrate data scientists with DevOps engineers. The days of throwing a Jupyter notebook over the wall to engineering are over.

Skills Roadmap: Invest in upskilling your team on prompt engineering, RAG (Retrieval-Augmented Generation) architectures, and non-deterministic testing strategies.

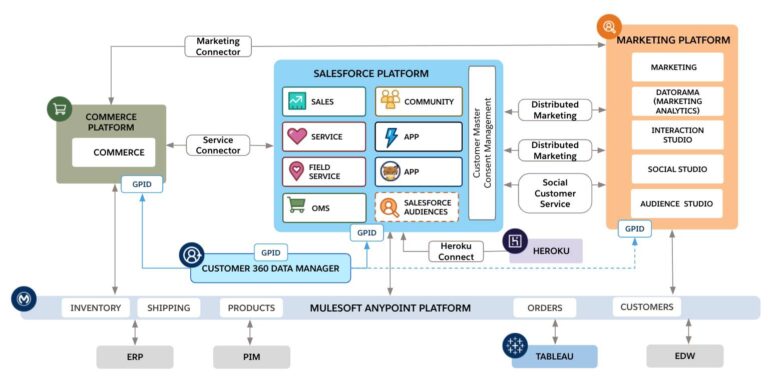

Reference Architecture Blueprint

A typical scalable stack for 2026 might look like this:

- Gateway Layer: API Gateway handling authentication, rate limiting, and request routing.

- Orchestration Layer: A durable workflow engine (like Temporal or Cadence) managing long-running agent state.

- Cognitive Layer: Stateless agent services running on Kubernetes, communicating via gRPC.

- Memory Layer: A scalable vector database (like Milvus or Pinecone) for long-term semantic memory, alongside Redis for short-term session caching.

- Inference Layer: A mix of proprietary APIs (for complex reasoning) and self-hosted open-source models (for fast, cheap tasks) managed via a model gateway.

- Observability Plane: An ELK stack or similar, tuned to ingest and visualize trace data from agent interactions.

Common Scaling Mistakes CTOs Should Avoid

Overprovisioning: Buying massive GPU clusters before you understand your workload profile. Start with flexible cloud resources and optimize as you gather data.

Vendor Lock-in: Building your entire agent logic around the quirks of a specific model (e.g., GPT-4). Abstraction layers are your friend. Ensure you can swap models without rewriting your entire codebase.

Lack of Governance: Letting teams spin up agents without a centralized registry. This leads to “shadow AI,” unmonitored costs, and security gaps.
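For the vendor lock-in point above, an abstraction layer can be as thin as a shared interface. In this sketch, `ChatModel` is a hypothetical protocol and the two classes are toy stand-ins for real provider adapters; none of them reflect an actual vendor SDK.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only surface agent logic is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class VendorAModel:
    """Toy stand-in for an adapter around one provider."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-a] {prompt}"


class VendorBModel:
    """Toy stand-in for an adapter around a different provider."""

    def complete(self, prompt: str) -> str:
        return f"[vendor-b] {prompt}"


def summarize(model: ChatModel, text: str) -> str:
    # Agent logic sees only the protocol, never a vendor-specific client.
    return model.complete(f"Summarize: {text}")


print(summarize(VendorAModel(), "Q3 report"))  # [vendor-a] Summarize: Q3 report
print(summarize(VendorBModel(), "Q3 report"))  # [vendor-b] Summarize: Q3 report
```

Model-specific quirks, such as prompt formatting or tool-call syntax, then live inside each adapter instead of leaking into agent code.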

Roadmap for Scaling AI Agents (90-Day Plan)

Phase 1: Stabilize (Days 1-30)

Focus on observability. Instrument your current pilots to capture detailed metrics on latency, cost, and error rates. Establish a baseline. Implement basic guardrails for security and cost.

Phase 2: Optimize (Days 31-60)

Introduce caching and specialized models. Identify the most expensive or slowest 20% of queries and refactor them to use smaller, faster models or cached responses. Formalize your platform engineering strategy.

Phase 3: Scale (Days 61-90)

Roll out the event-driven architecture. Migrate to Kubernetes if you haven’t already. Implement auto-scaling policies based on the metrics gathered in Phase 1. Open the floodgates to more users.

FAQ – Scaling AI Agents

What does it mean to scale AI agents?

Scaling AI agents means transitioning from running a few isolated bot instances to managing thousands of concurrent, autonomous agents that can reliably execute complex workflows. It involves solving challenges related to latency, cost, state management, and infrastructure resilience to ensure consistent performance under high load.

What infrastructure is best for AI agents?

There is no single “best” infrastructure, but a containerized, microservices-based architecture managed by Kubernetes is the industry standard. It offers the flexibility to scale compute resources dynamically. A hybrid approach—using public cloud for burst capacity and reserved or bare-metal GPUs for steady-state inference—often yields the best balance of performance and cost.

How do CTOs control AI infrastructure costs?

Control costs by implementing strict budget alerts, using semantic caching to avoid redundant computations, and employing a “model cascade” strategy (using cheaper, faster models for simple tasks and reserving expensive models for complex reasoning). Regular audits of GPU utilization and spot instance usage also play a major role.

Can Kubernetes run AI agents at scale?

Yes, Kubernetes is highly effective for scaling AI agents. Its ability to manage container lifecycles, auto-scale based on custom metrics (like queue depth or GPU load), and handle self-healing makes it ideal for the dynamic nature of AI workloads.

How do you secure AI agent workloads?

Security involves multiple layers: sanitizing input data to prevent prompt-injection attacks, implementing strict Role-Based Access Control (RBAC) so agents only access necessary data, and maintaining comprehensive audit logs of all agent actions. Running agents in isolated environments (sandboxes) prevents them from accessing sensitive underlying infrastructure.

What are common mistakes when scaling AI systems?

Common mistakes include treating AI models like standard databases (ignoring latency), failing to abstract model dependencies (vendor lock-in), underestimating the complexity of state management in long-running workflows, and neglecting to implement robust cost observability from day one.

A Mandate for Action

The transition to AI-driven enterprise infrastructure is not just a technical upgrade; it is an operational paradigm shift. The architecture you build today will determine your organization’s agility for the next decade.

Do not wait for the perfect moment or the perfect model. The technology is moving too fast. Focus on building a resilient, adaptable foundation that can absorb new advancements as they arrive. Formalize your roadmap, empower your platform teams, and begin the work of scaling your AI ambitions into reality.