Edge Database Hosting in 2026: Bringing Your Queries Closer to Global Users

Two hundred milliseconds. That is the new threshold for user abandonment.

In 2026, the internet is no longer just about connectivity; it is about immediacy. Users in London expect the same application responsiveness as users in San Francisco, regardless of where the primary data center resides. For CTOs and engineering leads, the challenge isn’t just about writing efficient code—it’s about overcoming the laws of physics. Light can only travel so fast.

This is where edge database hosting transforms the architectural landscape. By moving data storage and query processing out of centralized hubs and onto the network edge, organizations can slash latency and deliver real-time experiences that were previously impossible.

In this deep dive, we explore why edge database hosting has become the standard for high-performance applications in 2026. We will examine the architecture, compare it to traditional cloud models, and provide a framework for choosing the right provider for your global needs.

Why Edge Database Hosting Matters in 2026

The centralization of data was the defining characteristic of the cloud era (circa 2010-2020). We built massive data centers in Virginia, Frankfurt, and Tokyo, and we routed the world’s traffic to them. But as applications became more data-intensive and user bases became truly global, the limitations of this model became undeniable.

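If a user in Berlin queries a database hosted in Northern Virginia, that request makes a transatlantic round trip: the query crosses the ocean to reach the database, and the response crosses it again on the way back. Even in the best case, fiber propagation alone adds tens of milliseconds per round trip, and a single page load often needs several. In worse cases involving packet loss or congestion, the application feels sluggish or broken.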
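To put a rough number on the physics, the sketch below computes the lower bound on round-trip time from propagation delay alone. The figures are assumptions rather than measurements: light in optical fiber is taken as roughly 200,000 km/s, and the Berlin to Northern Virginia great-circle distance as roughly 6,700 km. Real routes are longer and add switching and queuing delay, so observed latency will be higher.

```python
# Lower bound on round-trip time from fiber propagation alone.
# Assumed values (not from any provider): ~200,000 km/s in fiber,
# ~6,700 km between Berlin and Northern Virginia.

FIBER_SPEED_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Return the theoretical minimum round-trip time in milliseconds."""
    one_way_s = distance_km / FIBER_SPEED_KM_PER_S
    return 2 * one_way_s * 1000


print(f"Minimum RTT: {min_round_trip_ms(6_700):.0f} ms")  # Minimum RTT: 67 ms
```

No amount of code optimization can go below this floor; only moving the data closer can.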

Edge database hosting solves this by distributing data replicas across hundreds of points of presence (PoPs) worldwide. It brings the data to the user, rather than forcing the user to travel to the data.

For SaaS platforms, e-commerce giants, and AI-driven applications, low-latency databases are no longer a “nice to have”; they are a competitive necessity. Serving a read query in 15ms instead of 150ms tends to go hand in hand with higher conversion rates, better user retention, and improved SEO rankings.

What Is Edge Database Hosting?

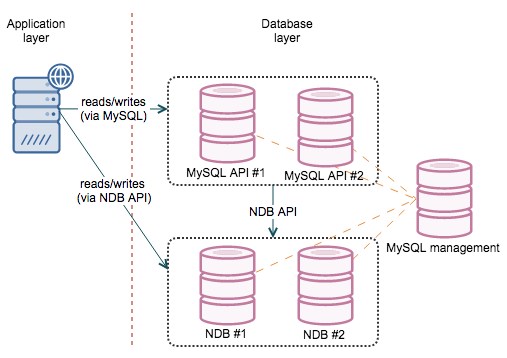

At its core, edge database hosting is a distributed computing paradigm that places database instances closer to the end-user. Unlike traditional cloud databases that live in a single region (or a primary region with a few read replicas), edge databases operate on a global mesh network.

Definition and Core Architecture

In a centralized architecture, your application server might live on the edge, but it still has to make a round trip to a central database to fetch data. This is often called the “edge sandwich” problem—fast compute, slow data.

Edge databases eliminate this bottleneck. The architecture typically involves:

- Global Replication: Data is automatically replicated across multiple nodes worldwide.

- Smart Routing: Incoming queries are routed to the nearest healthy node.

- Local Execution: Read queries are executed locally, minimizing network travel time.
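The smart-routing step can be sketched as "pick the closest node that is still healthy." The example below is a simplified illustration, not any provider's implementation: the `Node` type, the node list, and the use of great-circle distance as a stand-in for network latency are all assumptions. Production systems usually rely on anycast or measured latency instead.

```python
import math
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    lat: float
    lon: float
    healthy: bool = True


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points in kilometres."""
    r = 6371.0  # mean Earth radius
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def route(user_lat, user_lon, nodes):
    """Pick the closest node that is currently marked healthy."""
    candidates = [n for n in nodes if n.healthy]
    if not candidates:
        raise RuntimeError("no healthy nodes available")
    return min(candidates, key=lambda n: haversine_km(user_lat, user_lon, n.lat, n.lon))


# Hypothetical node list with approximate coordinates.
nodes = [
    Node("frankfurt", 50.11, 8.68),
    Node("dublin", 53.35, -6.26),
    Node("virginia", 39.04, -77.49),
]

munich = (48.14, 11.58)
print(route(*munich, nodes).name)  # frankfurt

nodes[0].healthy = False           # simulate a Frankfurt outage
print(route(*munich, nodes).name)  # dublin
```

The second call shows the same function doubling as a failover mechanism: once a node is marked unhealthy, traffic moves to the next closest one.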

Edge vs. Centralized Database Hosting

The fundamental difference lies in topology. Centralized databases prioritize consistency and ease of management by keeping everything in one place. Edge databases typically prioritize availability and partition tolerance (the AP side of the CAP theorem): if one region goes down or becomes unreachable, the rest of the network continues to serve traffic, at the cost of some replicas briefly returning stale data.

By 2026, advancements in distributed consensus algorithms have made edge computing databases surprisingly robust in maintaining data consistency, blurring the lines between traditional SQL guarantees and distributed performance.

How Edge Databases Reduce Latency for Global Users

The primary value proposition of edge database hosting is speed. But how exactly is that speed achieved?

Query Routing and Data Locality

When a user in Munich opens an application, a traditional setup might route their request to a server in Dublin. With an edge database, geo-aware DNS or anycast routing directs the request to a node potentially within Munich itself or in a nearby hub like Frankfurt.

The query doesn’t traverse the public internet for thousands of miles. It hops onto a private backbone network and hits a local database replica. This drastic reduction in physical distance is the most effective way to lower Time to First Byte (TTFB).

Impact on Page Load Time and API Response

For data-heavy applications, the database is often the bottleneck. You can optimize your frontend assets and use a CDN, but if your API takes 500ms to return a JSON payload because of database latency, the user experience suffers.

Global edge networks ensure that API responses are generated and delivered in near real-time. We are seeing innovative companies in 2026 achieve global API response times under 50ms, unlocking new possibilities for interactive, real-time user interfaces.

Edge Database Hosting vs. Cloud-Centralized Databases

Deciding between edge and cloud is one of the most critical infrastructure decisions a technical leader will make. Let’s break down the comparison.

| Feature | Centralized Cloud Database | Edge Database |
|---|---|---|
| Primary Location | Single region (e.g., us-east-1) | Global (distributed across 30+ regions) |
| Read Latency | Low for local users, high for global users | Consistently low globally |
| Write Latency | Low | Variable (depends on consistency model) |
| Complexity | Low to Medium | Medium to High |
| Ideal For | Monolithic apps, internal tools | Global SaaS, real-time apps, AI |

Performance Comparison

Centralized databases win on raw write throughput for a single location. If all your users are in New York, a database in New York is unbeatable. However, for a distributed user base, the edge database offers superior read performance.

Cost and Operational Trade-offs

Edge vs. cloud databases also differ in cost structure. Centralized databases often have simpler pricing (instance size + storage). Edge databases frequently bill based on request volume and data transfer. For read-heavy workloads, edge can be more cost-effective as it offloads traffic from the primary origin. However, write-heavy workloads at the edge can become expensive due to the cost of replicating data across the mesh.
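The asymmetry between reads and writes is easier to see with numbers. The following is a toy cost model, not a real price list: the per-million prices, the replica count, and the assumption that each write is billed once per replica are all hypothetical and chosen only to show the shape of the curve.

```python
def monthly_edge_cost(reads, writes, replicas,
                      price_per_million_reads, price_per_million_writes):
    """Request-based bill where each write is charged once per replica."""
    read_cost = reads / 1_000_000 * price_per_million_reads
    write_cost = writes / 1_000_000 * price_per_million_writes * replicas
    return read_cost + write_cost


# Hypothetical prices: $0.20 per million reads, $1.00 per million writes,
# with data replicated to 20 edge locations.
read_heavy = monthly_edge_cost(100_000_000, 1_000_000, 20, 0.20, 1.00)
write_heavy = monthly_edge_cost(10_000_000, 50_000_000, 20, 0.20, 1.00)

print(f"read-heavy:  ${read_heavy:.2f}")   # read-heavy:  $40.00
print(f"write-heavy: ${write_heavy:.2f}")  # write-heavy: $1002.00
```

Under these assumptions the write-heavy workload handles fewer total requests but costs far more, because every write fans out across the mesh.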

Key Use Cases Driving Edge Database Adoption

Adoption of real-time database hosting is being driven by specific industry needs that demand immediacy.

SaaS Platforms

Modern SaaS tools—project management, CRMs, collaborative docs—require multiplayer functionality. When two colleagues are editing a document, one in London and one in Sydney, latency kills the collaboration vibe. Edge databases allow both users to read and write changes with minimal delay, syncing the state in the background.

Gaming & Real-time Apps

In gaming, lag is the enemy. Edge infrastructure allows game state to be persisted closer to the player, ensuring that leaderboards, inventory management, and matchmaking data are retrieved instantly.

E-commerce & Personalization

Personalization engines need to know a user’s history now to recommend the right product now. If the database query takes too long, the page loads without the recommendation, and the opportunity is lost. Edge databases enable hyper-fast personalization queries, boosting average order value.

IoT and AI Inference

With billions of IoT devices online in 2026, sending every sensor reading to a central cloud is inefficient. Edge databases act as a localized buffer, storing and processing IoT data before syncing only the necessary aggregates to the core cloud. This is critical for industrial IoT and smart city infrastructure.
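A minimal sketch of the "buffer locally, sync aggregates" pattern is shown below. The `EdgeBuffer` class and its method names are illustrative assumptions, and a real deployment would persist readings and flush on a timer rather than on demand.

```python
from collections import defaultdict
from statistics import mean


class EdgeBuffer:
    """Holds raw sensor readings locally and emits per-sensor aggregates."""

    def __init__(self):
        self._readings = defaultdict(list)

    def record(self, sensor_id: str, value: float) -> None:
        self._readings[sensor_id].append(value)

    def flush(self) -> dict:
        """Return compact aggregates and clear the local buffer."""
        summary = {
            sensor_id: {
                "count": len(values),
                "min": min(values),
                "max": max(values),
                "mean": mean(values),
            }
            for sensor_id, values in self._readings.items()
        }
        self._readings.clear()
        return summary


buffer = EdgeBuffer()
for reading in (20.0, 22.0, 24.0):
    buffer.record("temp-1", reading)

# Only this small summary would be sent to the core cloud.
print(buffer.flush())
```

Three raw readings collapse into one summary record, which is the bandwidth saving the pattern is after.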

Edge Database Hosting and AI Workloads in 2026

Artificial Intelligence has reshaped infrastructure requirements. It’s not just about training models anymore; it’s about inference—running the model to get an answer.

Supporting AI Inference at the Edge

AI-ready databases at the edge are designed to store vector embeddings locally. When a user asks a chatbot a question, the system needs to retrieve relevant context (RAG – Retrieval Augmented Generation) to feed into the Large Language Model (LLM).

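If the vector database is in Virginia and the user is in Singapore, every retrieval pays an intercontinental round trip before the model can start generating. By hosting vector embeddings on the edge, the retrieval step runs next to the user and that round trip disappears.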
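The retrieval step itself is a nearest-neighbor search over embeddings. The sketch below uses brute-force cosine similarity over a tiny in-memory index; the document ids and three-dimensional vectors are made up for illustration, and it assumes no embedding is all zeros. Real vector stores use approximate indexes and much higher dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the k document ids most similar to the query embedding."""
    scored = sorted(
        index.items(),
        key=lambda item: cosine_similarity(query, item[1]),
        reverse=True,
    )
    return [doc_id for doc_id, _ in scored[:k]]


# Hypothetical embeddings held on the local edge node.
index = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.0],
    "api-limits": [0.0, 0.1, 0.9],
}

print(top_k([0.8, 0.2, 0.0], index))  # ['refund-policy', 'shipping-times']
```

The returned ids are what a RAG pipeline would look up and pass to the LLM as context.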

Reducing Model Response Times

Edge AI infrastructure allows for “split inference,” where smaller, faster models run on the edge alongside the database, handling common queries instantly. Only complex requests are routed to the central, massive models. This hybrid approach relies heavily on edge databases to maintain context and state between the edge and the core.
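One way to sketch split inference is a cascade: try the small edge model first and escalate only when it is unsure. The confidence threshold, the function names, and the stub models below are all assumptions made for illustration; the article does not prescribe how "complex" requests are detected.

```python
def answer(prompt, edge_model, core_model, threshold=0.8):
    """Serve from the edge model when confident, otherwise escalate to core.

    edge_model(prompt) is assumed to return (text, confidence in [0, 1]).
    core_model(prompt) is assumed to return text.
    """
    text, confidence = edge_model(prompt)
    if confidence >= threshold:
        return "edge", text
    return "core", core_model(prompt)


# Stub models standing in for real inference calls.
def small_edge_model(prompt):
    known = {"What are your opening hours?": "We are open 9 to 5."}
    if prompt in known:
        return known[prompt], 0.95
    return "", 0.10


def large_core_model(prompt):
    return f"Detailed answer to: {prompt}"


print(answer("What are your opening hours?", small_edge_model, large_core_model))
print(answer("Compare plan A and plan B for my usage.", small_edge_model, large_core_model))
```

The first call is served at the edge and the second is escalated, which is the hybrid behavior described above.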

Data Consistency, Replication & Security Challenges

The move to the edge is not without its hurdles. Distributed systems are notoriously difficult to manage.

Eventual vs. Strong Consistency

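The speed of light imposes hard limits. A write cannot become visible everywhere at once unless replicas coordinate across regions before acknowledging it, and that coordination adds at least one cross-region round trip to every write. Most edge databases therefore default to “eventual consistency”: when a record is updated in London, it might take a few hundred milliseconds to propagate to Tokyo, and a read in Tokyo during that window can return the old value.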
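The stale-read window can be illustrated with two in-memory replicas. This sketch uses last-write-wins by timestamp as the conflict rule, which is only one of several strategies and is an assumption here; the `Replica` class is hypothetical.

```python
class Replica:
    """A single replica that resolves conflicts with last-write-wins."""

    def __init__(self, name):
        self.name = name
        self._data = {}  # key -> (timestamp, value)

    def apply(self, key, value, timestamp):
        """Keep the incoming version only if it is newer than what we hold."""
        current = self._data.get(key)
        if current is None or timestamp > current[0]:
            self._data[key] = (timestamp, value)

    def read(self, key):
        entry = self._data.get(key)
        return entry[1] if entry else None


london, tokyo = Replica("london"), Replica("tokyo")

# Both replicas start in sync.
for replica in (london, tokyo):
    replica.apply("price", 10, timestamp=1)

# A write lands in London; replication to Tokyo has not happened yet.
london.apply("price", 12, timestamp=2)
print(london.read("price"), tokyo.read("price"))  # 12 10

# The update arrives in Tokyo and the replicas converge.
tokyo.apply("price", 12, timestamp=2)
print(london.read("price"), tokyo.read("price"))  # 12 12
```

Between the two print statements, Tokyo serves a stale value. That gap is what "eventual" means in practice.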

However, in 2026, we are seeing the rise of “strong consistency at the edge” for specific transaction types, built on established consensus protocols such as Paxos and Raft that have been tuned for geo-distributed clusters.
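Why majority-based consensus can stay fast across continents comes down to one observation: the leader only waits for the fastest majority, not for every replica. The sketch below estimates commit latency under that simplification. It ignores disk and processing time, and the round-trip figures are invented for illustration.

```python
def commit_latency_ms(follower_rtts_ms):
    """Time until a majority (leader included) has acknowledged a write."""
    cluster_size = len(follower_rtts_ms) + 1
    majority = cluster_size // 2 + 1
    acks_needed = majority - 1  # the leader's own vote is immediate
    if acks_needed == 0:
        return 0
    # The write commits when the acks_needed-th fastest follower replies.
    return sorted(follower_rtts_ms)[acks_needed - 1]


# Hypothetical RTTs from a Frankfurt leader to its four followers.
rtts = {"paris": 10, "london": 15, "virginia": 90, "tokyo": 230}
print(commit_latency_ms(list(rtts.values())))  # 15
```

With three of the five replicas in Europe, the write commits in about 15 ms even though Tokyo is 230 ms away. Placing a majority of voters close together is the main tuning lever.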

GDPR and Regional Data Compliance

Data sovereignty is a major concern for EU businesses. GDPR-compliant database hosting requires strict control over where personal data resides and where it is transferred. A German user’s personal data should ideally stay on servers within the EU.

Advanced edge providers now offer “region pinning.” This allows architects to define policies such as “Data for users in the EU must never replicate to US nodes.” This feature is essential for navigating the fragmented regulatory landscape of 2026.
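A region-pinning policy boils down to filtering replication targets by the record's residency tag. The sketch below is a hypothetical illustration; the policy format, node names, and function name are assumptions and do not reflect any specific provider's configuration syntax.

```python
# Hypothetical policy: records tagged "EU" may only live on these nodes.
RESIDENCY_POLICY = {
    "EU": {"eu-frankfurt", "eu-paris", "eu-dublin"},
}

ALL_NODES = ["eu-frankfurt", "eu-paris", "eu-dublin", "us-virginia", "ap-tokyo"]


def replication_targets(residency, all_nodes, policy):
    """Return the nodes a record with this residency tag may replicate to."""
    allowed = policy.get(residency)
    if allowed is None:
        return list(all_nodes)  # no pinning rule: replicate everywhere
    return [node for node in all_nodes if node in allowed]


print(replication_targets("EU", ALL_NODES, RESIDENCY_POLICY))
# ['eu-frankfurt', 'eu-paris', 'eu-dublin']
print(replication_targets("US", ALL_NODES, RESIDENCY_POLICY))
# ['eu-frankfurt', 'eu-paris', 'eu-dublin', 'us-virginia', 'ap-tokyo']
```

The important property is that the check runs before replication, so pinned data never leaves the allowed set even transiently.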

How to Choose the Right Edge Database Hosting Provider

Selecting a vendor for global database hosting requires a rigorous evaluation process.

Network Footprint (US, UK, EU)

Don’t just look at the number of PoPs; look at where they are. If your primary market is Germany, ensure the provider has deep density in Frankfurt, Berlin, and Munich, not just a single node in Amsterdam.

Uptime SLAs and Failover

Edge networks are complex. Failures happen. Look for providers that offer transparent SLAs (Service Level Agreements) and automatic failover. If the London node goes dark, traffic should seamlessly reroute to Paris or Dublin without manual intervention.
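When comparing SLAs, it helps to convert the percentage into an actual downtime budget. The short calculation below assumes a 30-day month; providers may define their measurement window differently.

```python
def allowed_downtime_minutes(sla_percent, days=30):
    """Downtime budget implied by an uptime SLA over the given period."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


for sla in (99.9, 99.99):
    print(f"{sla}% uptime allows {allowed_downtime_minutes(sla):.1f} min/month")
# 99.9% uptime allows 43.2 min/month
# 99.99% uptime allows 4.3 min/month
```

One extra "nine" shrinks the budget by a factor of ten, which is why automatic failover matters more than the headline percentage.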

Pricing Models

Be wary of egress fees. Some providers charge heavily for moving data between regions. Look for transparent, predictable pricing models that align with your growth. “Serverless” pricing (pay per read/write) is often great for startups but can become costly at enterprise scale.
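A quick way to judge when pay-per-request stops being the cheaper option is to compute the break-even volume against a flat provisioned fee. The prices below are hypothetical placeholders, and the model deliberately ignores egress and storage charges.

```python
def break_even_requests(provisioned_monthly_usd, price_per_million_requests_usd):
    """Monthly request count at which pay-per-request equals the flat fee."""
    return provisioned_monthly_usd / price_per_million_requests_usd * 1_000_000


# Hypothetical: $500/month provisioned vs. $0.50 per million requests.
threshold = break_even_requests(500, 0.50)
print(f"Break-even at {threshold:,.0f} requests per month")
# Break-even at 1,000,000,000 requests per month
```

Below that volume the serverless model is cheaper under these assumptions; above it, provisioned capacity wins.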

Future Trends: The Evolution of Edge Databases Beyond 2026

What comes next for the future of database hosting?

Serverless Edge Databases

We are moving toward true serverless at the edge. Developers will stop provisioning “nodes” or “clusters.” They will simply connect to an endpoint, and the infrastructure will elastically scale storage and compute based on real-time demand, scaling down to zero when idle.

Autonomous Replication and AI Optimization

Next-gen databases will use AI to manage themselves. The database will analyze traffic patterns and automatically decide where to replicate data. If it notices a spike in traffic from Brazil every Friday at 6 PM, it will pre-warm caches and replicate data to São Paulo nodes in anticipation, all without human intervention.

Bringing Queries Closer to Users Is No Longer Optional

The era of the monolithic, centralized database is ending for user-facing applications. The expectations of the global user base have simply outpaced what a single data center can deliver.

Edge database hosting in 2026 is about democratization—giving a user in rural England or a startup in Southeast Asia the same snappy, high-performance experience as a user in Silicon Valley. It is about architectural resilience, compliance, and readiness for the AI revolution.

For technical leaders, the mandate is clear: Audit your latency. If your data is static while your users are mobile, it is time to push your data to the edge.

Frequently Asked Questions (FAQ)

What is edge database hosting and how does it work?

Edge database hosting involves distributing database replicas across a global network of servers (the “edge”) rather than keeping them in a single central data center. It works by routing user queries to the geographically closest server, significantly reducing the distance data travels and ensuring faster response times.

Is edge database hosting better than cloud databases in 2026?

It depends on the use case. For global applications requiring low latency (like gaming, e-commerce, or SaaS), edge database hosting is generally better because it reduces load times. However, for internal, heavy-write applications or complex analytical workloads where all data needs to be in one place, a centralized cloud database may still be superior.

Which applications benefit most from edge databases?

Applications with a geographically distributed user base benefit the most. This includes global SaaS platforms, real-time multiplayer gaming, personalized e-commerce stores, content delivery apps, and IoT systems that require real-time data processing.

Are edge databases GDPR compliant for EU businesses?

Edge databases can support GDPR compliance, but they do not guarantee it on their own. Modern edge database providers offer features like “region pinning” or “data residency controls.” These allow you to configure the database so that personal data of people in the EU is stored and processed only on nodes located within the European Union, which addresses the data-location part of GDPR.

Does edge database hosting cost more than centralized hosting?

It can be more expensive if not managed correctly, due to data replication and transfer costs. However, for many read-heavy workloads, it can actually reduce costs by offloading expensive traffic from the primary origin server. It is crucial to evaluate the pricing model (request-based vs. provisioned) against your specific traffic patterns.

How does edge hosting improve AI and real-time performance?

Edge hosting enables AI inference to happen closer to the user. By storing vector embeddings and data context at the edge, AI models (like chatbots or recommendation engines) can retrieve necessary information in milliseconds rather than seconds, making real-time AI interactions feel instant and fluid.