Common Cloud Hosting Mistakes and How to Avoid Them

For businesses around the world, moving to the cloud feels like the ultimate efficiency hack. It promises scalability, speed, and freedom from clunky on-premise hardware. But for many, that dream quickly turns into a headache of unexpected bills, security breaches, and performance bottlenecks.

Cloud hosting is powerful, but it isn’t magic. It requires strategic planning and ongoing management. Unfortunately, many organizations jump in without a plan, making avoidable errors that cost them dearly in time, money, and reputation.

Whether you are migrating your first application or managing a complex infrastructure, understanding where others have failed is the best way to succeed. By identifying these pitfalls early, you can build a cloud environment that is secure, cost-effective, and ready to grow with your business.

1. Choosing the Wrong Cloud Hosting Plan

One of the first stumbling blocks occurs before a single file is uploaded: selecting a plan that doesn’t match your reality.

It is easy to fall into the trap of either underestimating or overestimating your needs. Some businesses buy the most expensive tier “just in case,” wasting thousands on idle CPU cycles. Others try to save pennies by choosing a plan with insufficient RAM or storage, leading to crashes the moment traffic spikes.

Another issue is failing to plan for future growth. A static plan might work for a month, but if your marketing campaign goes viral, a rigid hosting environment can become a straitjacket.

How to Avoid It

The key here is flexibility. Don’t try to predict the next five years on day one. Start small with a plan that covers your immediate baseline needs. Most cloud providers allow you to scale resources up or down with a few clicks.

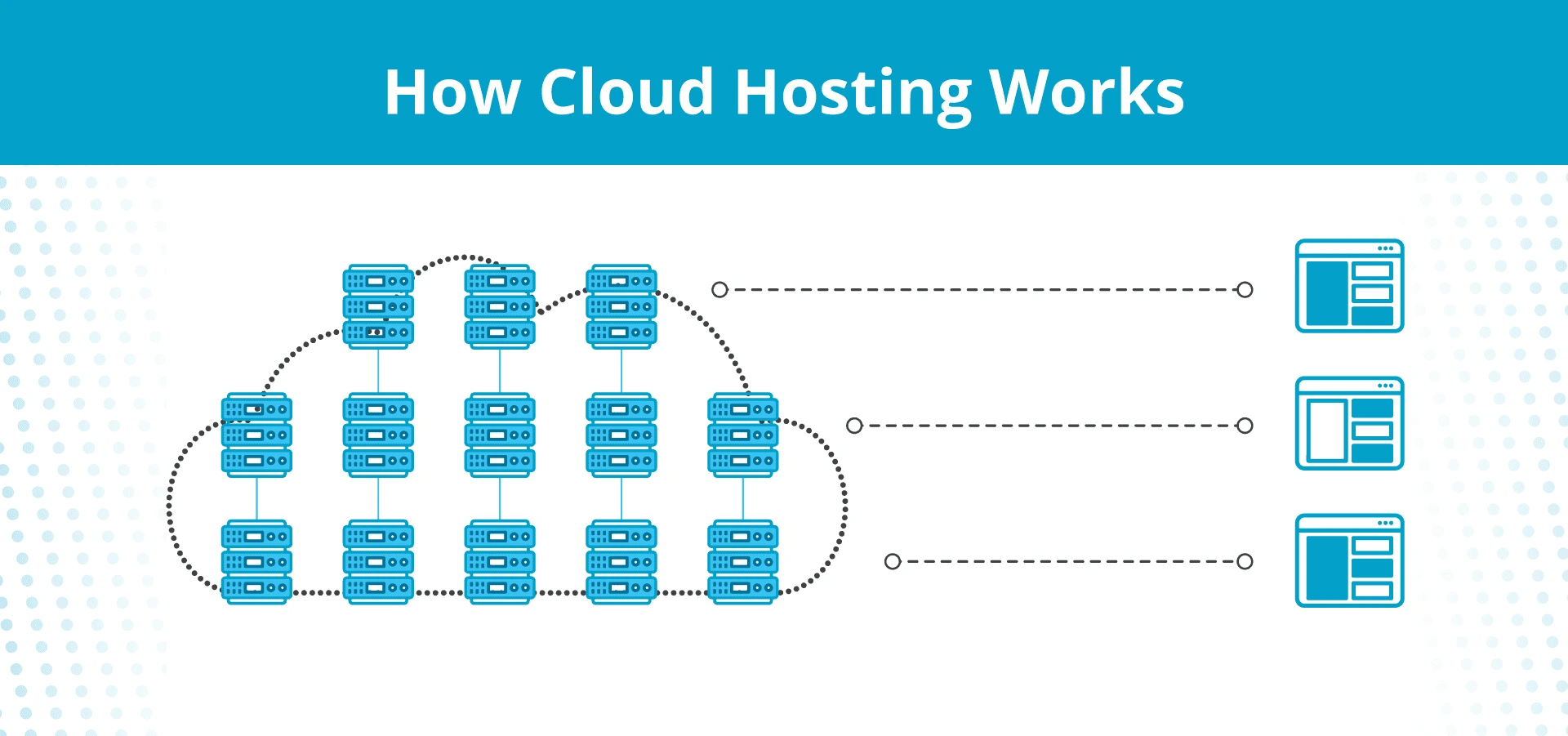

Monitor your resource usage closely during the first few weeks. Are you consistently hitting 90% CPU usage? Is your RAM barely touched? Use this data to adjust your plan. A provider that supports both vertical scaling (adding power to an existing server) and horizontal scaling (adding more servers) lets you keep adjusting as you learn, so you are not paying for more, or getting less, than you need.
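A minimal sketch of turning those observations into a decision, assuming you have exported CPU and memory utilization samples as percentages. The function name and the 85%/20% thresholds are illustrative assumptions, not guidance from any provider:

```python
from statistics import mean


def suggest_resize(cpu_samples, mem_samples, high=85.0, low=20.0):
    """Suggest a plan change from CPU and memory utilization samples (0-100%).

    The high/low thresholds are illustrative assumptions, not provider guidance.
    """
    if not cpu_samples or not mem_samples:
        raise ValueError("need at least one CPU and one memory sample")
    cpu_avg = mean(cpu_samples)
    mem_avg = mean(mem_samples)
    if cpu_avg >= high or mem_avg >= high:
        return "scale up"    # consistently close to capacity
    if cpu_avg <= low and mem_avg <= low:
        return "scale down"  # paying for capacity that sits idle
    return "keep"


print(suggest_resize([91, 94, 89, 92], [55, 60, 58, 57]))  # scale up
```

Averages hide short spikes, so in practice you might compare a high percentile of the samples rather than the mean.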

2. Ignoring Cloud Pricing Models

The “pay-as-you-go” model is a major selling point of cloud computing, but it is also a double-edged sword. If you don’t understand exactly what you are paying for, the bill at the end of the month can be a shock.

Many beginners assume they are only paying for the server instance itself. However, cloud costs are multi-dimensional. You are often charged separately for:

- Data Transfer (Egress): Moving data out of the cloud to the internet.

- Storage Operations: The read and write requests your application makes to storage (object storage, for example, is often billed per request).

- Static IP Addresses: Reserving an IP, even if the server is off.

- Backups and Snapshots: Storing recovery points.
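To see why the instance price alone is misleading, here is a rough estimator that adds up each billing dimension separately. Every rate below is a made-up placeholder, not a real price from any provider; substitute the figures from your provider's pricing pages.

```python
# Placeholder unit prices in USD. These are NOT real prices from any provider.
HYPOTHETICAL_RATES = {
    "instance_hours": 0.05,        # per hour the server runs
    "egress_gb": 0.09,             # per GB transferred out to the internet
    "storage_requests_1k": 0.005,  # per 1,000 read/write requests
    "static_ip_hours": 0.005,      # per hour an IP address is reserved
    "snapshot_gb_month": 0.05,     # per GB-month of backup storage
}


def estimate_monthly_bill(usage, rates=HYPOTHETICAL_RATES):
    """Return (total, per-item breakdown) for usage quantities keyed like `rates`."""
    breakdown = {item: round(qty * rates[item], 2) for item, qty in usage.items()}
    return round(sum(breakdown.values()), 2), breakdown


usage = {
    "instance_hours": 730,        # one server running all month
    "egress_gb": 500,
    "storage_requests_1k": 2000,  # 2 million requests
    "static_ip_hours": 730,
    "snapshot_gb_month": 100,
}
total, breakdown = estimate_monthly_bill(usage)
print(total, breakdown)
```

With these invented numbers the total comes to 100.15, and the server itself (36.50) accounts for less than 40 percent of it.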

How to Avoid It

Before you commit, dig into the billing documentation. Understand the metrics used to calculate costs. Does the provider charge for incoming traffic? Is there a fee for load balancers?

Once you are up and running, set up budget alerts immediately. Most major platforms (AWS, Google Cloud, Azure) let you set a monthly budget and will email you when your actual or forecasted spending crosses it. Keep in mind that these budgets generally alert you rather than cap your spending, so someone still has to act on the notification. This simple step can catch a “billing surprise” caused by a rogue script or a traffic surge before it grows.
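Provider budget tools use their own forecasting, but the core idea behind a forecasted-spend alert can be sketched with a naive straight-line projection. This is an illustration only; the function names and the linear extrapolation are assumptions, not how any particular provider computes its forecast:

```python
import calendar
from datetime import date


def projected_month_end_spend(spend_to_date, today):
    """Linearly extrapolate month-to-date spend to the end of the month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    return spend_to_date / today.day * days_in_month


def should_alert(spend_to_date, today, monthly_budget, threshold=1.0):
    """True if projected spend reaches `threshold` x budget (1.0 means 100%)."""
    projected = projected_month_end_spend(spend_to_date, today)
    return projected >= threshold * monthly_budget


# $100 spent by June 10 projects to $300 for the 30-day month.
print(should_alert(100, date(2024, 6, 10), monthly_budget=250))  # True
```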

3. Poor Security Configuration

Security in the cloud is a shared responsibility. The provider secures the physical data center and the hardware, but you are responsible for securing what you put in the cloud. A common mistake is assuming the default settings are secure enough.

Leaving ports open to the public internet, using weak passwords for root access, or failing to secure API keys are invitations for hackers. One of the most frequent causes of data leaks is an unsecured storage bucket (like an AWS S3 bucket) that allows anyone with the URL to download sensitive files.

How to Avoid It

Adopt a “least privilege” approach. Use strong Identity and Access Management (IAM) policies to ensure users and applications only have access to the specific resources they need.
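On AWS, for example, least privilege means writing IAM policies that name specific actions and resources instead of wildcards. The sketch below builds such a policy as a Python dictionary and flags statements that allow a bare `*`. The bucket name and prefix are hypothetical, and the check is deliberately simple: it would not catch a service-wide wildcard such as `s3:*`.

```python
import json


def read_only_prefix_policy(bucket, prefix):
    """IAM policy that only allows reading objects under one bucket prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            }
        ],
    }


def _as_list(value):
    # IAM allows these fields to be either a single value or a list.
    return value if isinstance(value, list) else [value]


def overly_broad_statements(policy):
    """Return Allow statements whose Action or Resource is a bare '*'."""
    return [
        stmt
        for stmt in _as_list(policy["Statement"])
        if stmt["Effect"] == "Allow"
        and ("*" in _as_list(stmt.get("Action", [])) or "*" in _as_list(stmt.get("Resource", [])))
    ]


policy = read_only_prefix_policy("example-app-uploads", "invoices")  # hypothetical names
print(json.dumps(policy, indent=2))
print(overly_broad_statements(policy))  # [] means no bare wildcards
```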

Enable firewalls to block all traffic except what is absolutely necessary. For example, your database port should never be open to the entire internet; it should only accept connections from your application server. Finally, encrypt your data both at rest (on the disk) and in transit (as it moves over the network). Regular security audits should be part of your monthly routine.
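Part of a firewall audit can be automated. This sketch checks a list of inbound rules and flags any that expose a default database port (3306 for MySQL, 5432 for PostgreSQL) to sources outside the application server's subnet. The rule format is a hypothetical simplification; real security-group and firewall APIs return richer structures.

```python
import ipaddress

DB_PORTS = {3306, 5432}  # default MySQL and PostgreSQL ports


def exposed_db_rules(rules, app_subnet):
    """Return inbound rules that open a database port beyond `app_subnet`.

    Each rule is a dict such as {"port": 5432, "source": "10.0.1.0/24"}
    (a hypothetical, simplified rule format).
    """
    allowed = ipaddress.ip_network(app_subnet)
    flagged = []
    for rule in rules:
        if rule["port"] not in DB_PORTS:
            continue
        source = ipaddress.ip_network(rule["source"])
        # A source of a different IP version cannot lie inside the app subnet,
        # and subnet_of() requires both networks to share a version.
        if source.version != allowed.version or not source.subnet_of(allowed):
            flagged.append(rule)
    return flagged


rules = [
    {"port": 5432, "source": "0.0.0.0/0"},    # whole internet: flagged
    {"port": 5432, "source": "10.0.1.0/24"},  # app subnet only: fine
    {"port": 443, "source": "0.0.0.0/0"},     # public HTTPS: not a DB port
]
print(exposed_db_rules(rules, "10.0.1.0/24"))
```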

4. Not Implementing Proper Backups

“The cloud never fails” is a dangerous myth. While cloud infrastructure is generally more reliable than a server in your closet, outages happen. Data centers lose power, fiber cables get cut, and hardware fails.

If you assume your provider is automatically backing up your data, you might be in for a rude awakening. While they ensure the durability of the infrastructure, they don’t always protect you from accidental deletion, corruption, or ransomware. If you accidentally delete your database, and you haven’t configured snapshots, that data is gone.

How to Avoid It

Treat your cloud data with the same paranoia you would treat local data. Schedule automated backups to occur daily (or even hourly, depending on your data volatility).
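Automated backups also need a retention rule, or old copies pile up (and, as noted in the pricing section, snapshots are billed). Here is a minimal sketch of one possible rule, assuming backups are identified by their timestamps: delete anything older than the retention window, but never the newest few, so a failing backup job cannot leave you with nothing. The function name and defaults are illustrative assumptions.

```python
from datetime import datetime, timedelta


def backups_to_prune(backup_times, now, keep_days=7, min_keep=3):
    """Return backups older than `keep_days`, always sparing the newest `min_keep`."""
    newest_first = sorted(backup_times, reverse=True)
    protected = set(newest_first[:min_keep])
    cutoff = now - timedelta(days=keep_days)
    return sorted(t for t in backup_times if t < cutoff and t not in protected)


now = datetime(2024, 6, 30)
daily = [now - timedelta(days=d) for d in range(10)]  # ten daily backups
print(backups_to_prune(daily, now))  # the two backups older than seven days
```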

Crucially, do not just set it and forget it. You must test your restoration procedures. A backup is useless if it takes three days to restore or if the file is corrupted. Run a disaster recovery drill once a quarter to ensure you can get your systems back online quickly.
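Part of a restore drill can be scripted: time the restore, then confirm the restored file matches a SHA-256 checksum recorded when the backup was taken, which catches a corrupted backup as well as a bad copy. In this sketch a local file copy stands in for your real restore procedure, and `max_seconds` represents whatever recovery-time target you have set; both are assumptions for illustration.

```python
import hashlib
import shutil
import tempfile
import time
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Hash a file in chunks so large backups don't need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def run_restore_drill(restore_fn, backup, restored, expected_sha256, max_seconds):
    """Run restore_fn(backup, restored); return (passed, elapsed_seconds).

    Passes only if the restore finishes in time AND the checksum matches.
    """
    start = time.monotonic()
    restore_fn(backup, restored)
    elapsed = time.monotonic() - start
    intact = sha256_of(restored) == expected_sha256
    return intact and elapsed <= max_seconds, elapsed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        backup = Path(tmp, "db-backup.dump")
        backup.write_bytes(b"example backup contents")
        expected = sha256_of(backup)  # in practice, record this when the backup is taken
        restored = Path(tmp, "restored.dump")
        # shutil.copyfile stands in for a real restore job here.
        passed, elapsed = run_restore_drill(shutil.copyfile, backup, restored, expected, max_seconds=60)
        print(f"passed={passed} elapsed={elapsed:.3f}s")
```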

5. Overlooking Performance Optimization

You can have the most powerful server in the world, but if your configuration is poor, your website will still be slow.

A common mistake is serving all content directly from the web server. If a user in London visits a site hosted in San Francisco, every request has to cross the Atlantic and most of North America, and that extra latency hurts the user experience. Another issue is failing to implement caching. If your application queries the database for the exact same information for every single visitor, the database becomes a bottleneck.

How to Avoid It

Leverage a Content Delivery Network (CDN). A CDN stores copies of your static files (images, CSS, JavaScript) on servers located around the globe. When a user visits your site, they download these files from a server near them, drastically reducing load times.
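How long a CDN keeps a copy of a file is usually governed by the `Cache-Control` header your origin server sends. The sketch below shows one common policy: long-lived caching for static assets and revalidation for HTML. It assumes your build gives static files new names (for example, a content hash) whenever they change; without that, a one-year `max-age` would keep serving stale files. The extension list is illustrative.

```python
from pathlib import PurePosixPath

# Illustrative list of static asset types to cache aggressively.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".webp", ".woff2"}

ONE_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds


def cache_control_for(path):
    """Pick a Cache-Control header value for a URL path."""
    suffix = PurePosixPath(path).suffix.lower()
    if suffix in STATIC_EXTENSIONS:
        # Safe only because filenames change when content changes (assumed).
        return f"public, max-age={ONE_YEAR}, immutable"
    # "no-cache" still allows caching, but requires revalidation before reuse.
    return "no-cache"


print(cache_control_for("/static/app.3f9a1c.js"))  # public, max-age=31536000, immutable
print(cache_control_for("/index.html"))            # no-cache
```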

On the server side, implement caching layers like Redis or Memcached. These tools store frequently accessed data in memory, allowing your application to retrieve it far faster than it could by querying the database. Finally, always choose a data center region that is geographically closest to the majority of your audience.
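A common way to put Redis or Memcached in front of a database is the cache-aside pattern: check the cache first, and only on a miss query the database and store the result with an expiry time. The sketch below uses an in-process dictionary as a stand-in for the cache server so it runs on its own; the class name, TTL, and loader function are hypothetical, and a real deployment would keep the entries in Redis or Memcached instead.

```python
import time


class TTLCache:
    """In-process stand-in for Redis/Memcached, used with the cache-aside pattern."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, expires_at)

    def get_or_load(self, key, loader):
        """Return the cached value, calling loader(key) only on a miss or after expiry."""
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit: no database query
        value = loader(key)  # cache miss: query the source of truth
        self._store[key] = (value, now + self.ttl)
        return value


def load_product_from_db(product_id):
    # Hypothetical slow database query.
    time.sleep(0.05)
    return {"id": product_id, "name": f"Product {product_id}"}


cache = TTLCache(ttl_seconds=60)
cache.get_or_load(42, load_product_from_db)  # miss: queries the "database"
cache.get_or_load(42, load_product_from_db)  # hit: served from memory
```

The trade-off is staleness: for up to `ttl_seconds`, readers may see an old value unless you delete the key whenever the underlying data changes.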

6. Failing to Enable Auto-Scaling

Traffic is rarely consistent. An e-commerce site might be quiet at 3 AM on a Tuesday but overwhelmed at 9 AM on Black Friday.

If you rely on manual scaling, you are playing a dangerous game. By the time you notice your site is slowing down and you manually upgrade the server, you have likely already lost customers. Conversely, if you keep a massive server running 24/7 to handle potential spikes, you are wasting money during quiet periods.

How to Avoid It

Configure auto-scaling rules. This feature allows your infrastructure to automatically add resources when demand rises (scale out) and remove them when demand falls (scale in).
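Many auto-scalers use target tracking: you pick a target for a metric (say, 60% average CPU) and the scaler adjusts the instance count to move back toward it. The sketch below applies the proportional formula used by the Kubernetes Horizontal Pod Autoscaler, clamped to a minimum and maximum group size; the function name and numbers are illustrative.

```python
import math


def desired_instances(current, current_metric, target_metric, min_size, max_size):
    """Target tracking: desired = ceil(current * current_metric / target_metric),
    clamped to [min_size, max_size]."""
    if current < 1 or target_metric <= 0:
        raise ValueError("need at least one instance and a positive target")
    desired = math.ceil(current * current_metric / target_metric)
    return max(min_size, min(max_size, desired))


print(desired_instances(4, current_metric=90, target_metric=60, min_size=2, max_size=10))  # 6
print(desired_instances(4, current_metric=15, target_metric=60, min_size=2, max_size=10))  # 2
```

Real scalers layer a tolerance band and cooldown or stabilization periods on top of this so the group does not flap between sizes on every small fluctuation.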

Pair this with a Load Balancer. The load balancer acts as a traffic cop, distributing incoming requests evenly across your healthy servers. This ensures that no single server gets overwhelmed, keeping your application responsive regardless of traffic volume.
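Round robin with health checks is one of the simplest strategies a load balancer can use. This sketch hands out servers in order and skips any marked unhealthy; the server names are placeholders, and real load balancers also offer other strategies, such as least connections.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out servers in turn, skipping any marked unhealthy."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        # In a real balancer, a failed health check would trigger this.
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Try each server at most once per call before giving up.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


lb = RoundRobinBalancer(["app-1", "app-2", "app-3"])  # placeholder names
lb.mark_down("app-2")
print([lb.next_server() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```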

7. Lack of Monitoring & Alerts

Flying blind is a surefire way to crash. Many administrators don’t look at their server metrics until users start complaining that the site is down. By then, the damage is done.

Without visibility into resource usage, you can’t troubleshoot effectively. Is the site slow because the CPU is maxed out? Is the disk full? Is the database locked? Without historical data and real-time monitoring, you are just guessing.

How to Avoid It

Implement a robust monitoring solution. Tools like Prometheus, Datadog, or the provider’s native tools (like Amazon CloudWatch) provide deep visibility into your stack.

Set up alerts for critical thresholds. You should receive a notification if CPU usage stays above 80% for five minutes, or if disk space drops below 10%. Being proactive allows you to fix issues before they impact your end users.
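Tools such as Prometheus and CloudWatch let you express rules like these in their own configuration, but the underlying logic is simple. Assuming one CPU sample per minute, "above 80% for five minutes" means the last five samples all exceed 80. The function names below are hypothetical:

```python
def sustained_breach(samples, threshold, window):
    """True if each of the last `window` samples exceeds `threshold`.

    With one sample per minute, window=5 means "above threshold for five minutes".
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if len(samples) < window:
        return False  # not enough history yet
    return all(value > threshold for value in samples[-window:])


def low_disk(free_bytes, total_bytes, min_free_fraction=0.10):
    """True if free space has dropped below `min_free_fraction` of the disk."""
    return free_bytes / total_bytes < min_free_fraction


cpu_per_minute = [72, 85, 88, 91, 86, 90]
print(sustained_breach(cpu_per_minute, threshold=80, window=5))  # True
print(low_disk(free_bytes=8 * 2**30, total_bytes=100 * 2**30))  # True (8% free)
```

Requiring the condition to hold across the whole window keeps a single momentary spike from paging anyone.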

8. Choosing Unmanaged Cloud Without the Skills to Run It

Cloud providers offer two main types of services: managed and unmanaged.

Unmanaged hosting (Infrastructure as a Service) gives you a blank slate: a virtual machine with an operating system. You are responsible for installing software, applying security updates, and configuring the network. Beginners often choose this because it looks cheaper on paper. However, the technical complexity can be overwhelming, and a misconfigured server is both vulnerable and unstable.

How to Avoid It

Be honest about your team’s technical capabilities. If you don’t have a dedicated sysadmin or DevOps engineer, unmanaged hosting is likely a mistake.

Consider Managed Cloud Hosting or Platform as a Service (PaaS). With these options, the provider manages the underlying infrastructure, operating system updates, and security patches. You focus solely on your code and application. The slightly higher monthly fee pays for itself by freeing up your time and preventing costly configuration errors.

9. Vendor Lock-In

Vendor lock-in happens when you build your architecture so deeply into one provider’s proprietary tools that moving to another provider becomes technically difficult and prohibitively expensive.

For example, if you use a database service or a message queue that is unique to Provider A, you can’t easily migrate to Provider B if prices rise or service quality drops. You are stuck.

How to Avoid It

Whenever possible, use open-source and portable technologies. Instead of using a proprietary database engine, use standard MySQL or PostgreSQL.
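One habit that supports this is keeping connection details in configuration rather than code. Because PostgreSQL connection URLs follow a standard format, the same parsing code works whichever provider hosts the database. The `DATABASE_URL` environment variable is a widespread convention assumed here, and the hostnames are hypothetical.

```python
import os
from urllib.parse import urlsplit


def postgres_settings(url=None):
    """Split a standard postgresql:// URL into connection settings.

    Falls back to the DATABASE_URL environment variable (an assumed convention).
    """
    url = url or os.environ["DATABASE_URL"]
    parts = urlsplit(url)
    if parts.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    return {
        "host": parts.hostname,
        "port": parts.port or 5432,  # PostgreSQL's default port
        "user": parts.username,
        "password": parts.password,
        "dbname": parts.path.lstrip("/"),
    }


# Moving providers means changing this URL, not the application code.
example = "postgresql://app:secret@db.provider-a.example:5432/shop"  # hypothetical host
print(postgres_settings(example)["host"])  # db.provider-a.example
```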

Containerization is your best friend here. Docker packages your application and its dependencies into a container image that runs the same way on any provider that supports containers, and Kubernetes, which every major provider offers as a managed service, lets you run those containers consistently across clouds. This gives you leverage and flexibility. Always keep an exit strategy in mind, even if you never plan to use it.

10. Not Reading the SLA Carefully

The Service Level Agreement (SLA) is the contract that defines the reliability you can expect from your provider. A common mistake is assuming 100% uptime is guaranteed. It almost never is.
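It helps to translate an uptime percentage into minutes. A 30-day month has 43,200 minutes, so a 99.9% guarantee still permits about 43 minutes of downtime, and 99.99% about 4.3 minutes. A small helper makes the arithmetic explicit (the 30-day period is an assumption; check how your SLA defines its measurement window):

```python
def allowed_downtime_minutes(uptime_percent, days=30):
    """Maximum downtime (in minutes) an uptime guarantee permits over `days` days."""
    total_minutes = days * 24 * 60
    return total_minutes * (1 - uptime_percent / 100)


for sla in (99.0, 99.9, 99.95, 99.99):
    print(f"{sla}% -> {allowed_downtime_minutes(sla):.1f} minutes per 30 days")
```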

Furthermore, many people misunderstand how compensation works. If the provider fails to meet their uptime guarantee, they don’t automatically send you a check. You usually have to apply for a service credit within a specific timeframe, proving the downtime occurred.

How to Avoid It

Read the fine print. Understand exactly what “uptime” includes and what it excludes (scheduled maintenance is usually excluded).

Know the support tiers. Does your plan include 24/7 phone support, or only email support with a 48-hour response time? If your business runs critical applications, a cheap plan with poor support SLAs is a risk you cannot afford to take.

Building a Better Cloud Strategy

Avoiding these common mistakes is about shifting your mindset from “setting up a server” to “managing an ecosystem.” The cloud rewards those who plan ahead, monitor continuously, and prioritize security.

By choosing the right plan, understanding the costs, securing your data, and automating your operations, you turn cloud hosting from a liability into a competitive advantage.

Checklist: Cloud Hosting Best Practices

Before you launch your next project, run through this quick checklist to ensure you are covered.

- Plan Selection: Have I matched resources to my actual workload and planned for scaling?

- Budgeting: Do I understand the billing metrics and have I set up budget alerts?

- Security: Are firewalls active, ports closed, and IAM policies restricted?

- Backups: Are automated backups running, and have I tested a restore recently?

- Performance: Am I using a CDN and caching to reduce latency?

- Scaling: Is auto-scaling enabled to handle traffic spikes?

- Monitoring: Do I have alerts set for CPU, RAM, and disk usage?

- Support: Do I understand the SLA and have the right level of support access?

Frequently Asked Questions

Is cloud hosting risky for beginners?

Cloud hosting can be risky if you jump into unmanaged services without technical knowledge. However, if you choose managed hosting solutions where the provider handles the technical maintenance, it is very beginner-friendly. The key is to start with a platform that matches your skill level.

What is the biggest cloud hosting mistake?

The most damaging mistake is typically poor security configuration. Leaving databases exposed or neglecting IAM permissions can lead to data breaches that destroy a company’s reputation. Financial mistakes (like ignoring pricing models) are painful, but security mistakes can be fatal to a business.

How do I reduce cloud hosting costs?

To reduce costs, right-size your instances so you aren’t paying for unused power. Utilize “reserved instances” if you have predictable long-term needs, as these offer significant discounts over on-demand pricing. Finally, ensure you are cleaning up unused resources like unattached storage volumes and old snapshots.
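Finding cleanup candidates is easy to script once you export an inventory of volumes and snapshots. In this sketch the inventory format (lists of dictionaries) and the 90-day snapshot limit are assumptions; it only reports candidates, since deletion should remain a reviewed step.

```python
from datetime import datetime, timedelta


def cleanup_candidates(volumes, snapshots, now, max_snapshot_age_days=90):
    """Return (unattached volume IDs, snapshot IDs older than the age limit).

    Only reports candidates; deleting should stay a reviewed, deliberate step.
    """
    unattached = [v["id"] for v in volumes if v.get("attached_to") is None]
    cutoff = now - timedelta(days=max_snapshot_age_days)
    stale = [s["id"] for s in snapshots if s["created"] < cutoff]
    return unattached, stale


# Hypothetical inventory, e.g. exported from your provider's console or CLI.
volumes = [
    {"id": "vol-1", "attached_to": "web-1"},
    {"id": "vol-2", "attached_to": None},  # left behind after a server was deleted
]
snapshots = [
    {"id": "snap-1", "created": datetime(2024, 1, 1)},
    {"id": "snap-2", "created": datetime(2024, 6, 1)},
]
print(cleanup_candidates(volumes, snapshots, now=datetime(2024, 6, 30)))
```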